Welcome to the final installment of my three-part series of posts about the pros and cons of compressed audio. If you haven’t read from the beginning, it’d be a good idea to start there. Here’s a link: Understanding MP3s (and other compressed music) – Part 1

By the end of Part 2 you hopefully have an understanding of the process of compression (i.e. removing sounds that we theoretically won’t hear) and also the impact that this removal has on the overall “picture” created by the sound. For this final part of the article, you need to keep this concept of a musical “picture” in mind because this final concept is all about the hidden magic within the picture, not the individual, identifiable details.

Harmonics

You might have heard of harmonics before. If you’ve played certain musical instruments (particularly stringed instruments), you might have even deliberately created pure harmonics. If you haven’t heard of harmonics, don’t worry – here’s a short explanation.

Anytime you play an instrument that uses a string or air to create sound (i.e. just about any instrument other than electronic synthesizers), you are creating harmonics. Harmonics are the additional vibrations that occur along with the note that you’re creating. Have you ever run your finger around the rim of a glass to create a musical note? That’s a closely related concept. Your finger running on the edge of the glass creates vibrations. If you get the speed of your finger movements correct, the vibrations you create match the natural vibration frequency of the glass. As a result, the whole glass vibrates together and forms a beautiful clear note. Different glasses will vibrate at different speeds of movement and will create different notes as a result. This natural tendency of an object to vibrate at particular frequencies is the idea behind harmonics.

If you were to walk up to a piano and strike the key known as “Middle C”, you would hear a note – just one single note, but that note will have a quality very different to the same note on another piano or on a violin. The reason for this is the creation of resonance and harmonics. To explain this, I’m going to talk about the note called “A” which is a few notes above “Middle C”. I’m using the “A” because it makes the maths easier.

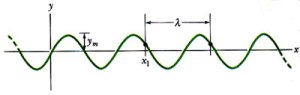

If you now strike the “A” you’ll hear a single note once again. This time, the note will sound higher than the previous “C”. What’s actually happening though is that your ear is receiving vibrations and these vibrations are moving 440 times every second (440 Hz). However, there are also other vibrations going on and the majority of these vibrations are directly related to the 440 Hz we began with. As the “A” string inside the piano vibrates, it creates waves of vibration. The loudest of these typically move 440 times per second, but the string also creates other waves moving 880 times, 1320 times, 1760 times per second, etc.

Every note created by an acoustic instrument naturally creates these harmonics, which go up in whole-number multiples of the original frequency (i.e. 1, 2, 3, 4, 5, 6, etc.). Old synthesizers sounded particularly fake because they didn’t recreate these harmonics and the output sounded flat and lifeless. Newer synthesizers create harmonics artificially and have come closer to the sound of the real thing, but there’s still a degree of difference because of the subtleties that acoustic instruments can create. A slight difference in strike pressure on a piano, plucking/strumming strength on a guitar or force of air through a trumpet can create a significantly different tone as a result of the different range of vibrations it creates. All of these subtleties are the “magic” that make music so special and exciting.
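If you’d like to see the numbers for yourself, here’s a minimal Python sketch that lists the first few harmonics of the “A” at 440 Hz. It assumes each harmonic is a whole-number multiple of the starting frequency (the standard definition of the harmonic series). The function name `harmonic_series` is just something I’ve made up for illustration, and a real piano string will drift slightly from these ideal values.

```python
def harmonic_series(fundamental_hz, count):
    """Return the first `count` harmonics, starting with the fundamental itself."""
    # Harmonic n is simply n times the fundamental frequency.
    return [fundamental_hz * n for n in range(1, count + 1)]


a_above_middle_c = 440  # Hz, the "A" used in the example above

for n, freq in enumerate(harmonic_series(a_above_middle_c, 6), start=1):
    print(f"harmonic {n}: {freq} Hz")
```

The first entry is the note you consciously hear; the rest are the quieter vibrations that give the instrument its particular tone.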

A quick note: this blog is not anti-electronic music. Electronic instruments (i.e. synthesizers, drum machines, etc.) can create amazing music which is impossible with traditional acoustic instruments. The discussion of acoustic versus electronic instruments is designed purely to illustrate the importance of keeping harmonics where they were originally intended/recorded.

Harmonics, Subtleties & Compression

In reading the section above, you might have wondered why you’ve never heard these harmonics. You might even choose to put on your favourite CD and try to listen for them. You can actually hear these harmonics if you listen carefully, but the key thing to recognise here is that we aren’t consciously aware of them in normal circumstances. The harmonics and subtleties happen “behind the scenes” of the music and are rarely noticed by the casual listener or anyone who is not actively listening for harmonics.

If you now think back to my previous discussion of compression and the removal of sounds that we theoretically don’t hear, you might see the connection. The first things to be “compressed” (i.e. removed) are the harmonics and subtle, quiet sounds that create the finest details and tonal qualities of the music. To the casual ear, nothing seems to be missing, but play the same song compressed and uncompressed through good speakers and you might notice a difference that you can’t quite put your finger on. Here’s another visual example.

The following picture is a high-resolution (1900 x 1200) desktop wallpaper image provided with Microsoft Windows 7. I’ve used it because it has a certain magic about it in terms of its depth and detail.

The next version of that image is at a lower resolution of 800 x 500 pixels (a bit like a lower bit-rate of compression).

Notice there’s a certain level of the “magic” missing from the second image? It’s hard to put a finger on exactly what’s missing, but the image isn’t as instantly captivating and engaging to the eye. It almost looks flatter somehow – less bright and alive.

Here’s one last version at 600 x 375 pixels, making it even lower resolution and stealing more of the “magic”.

Are you seeing a difference? Don’t worry if you’re not. Go back now and take a close look at the textures of the character’s face and the stitching on his costume. As the resolution drops, so does the detail. See it? That’s exactly what’s happening to your music.
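To put rough numbers on the picture analogy, here’s a small Python sketch that counts the pixels in each of the three image sizes mentioned above. The function name `pixel_count` is only illustrative, and pixel count is a loose stand-in for “detail”, not a description of how audio compression actually works.

```python
def pixel_count(width, height):
    """Total number of pixels in an image of the given dimensions."""
    return width * height


# The three image sizes used in the comparison above.
sizes = [(1900, 1200), (800, 500), (600, 375)]
full = pixel_count(*sizes[0])

for width, height in sizes:
    pixels = pixel_count(width, height)
    share = pixels / full  # fraction of the original image's pixels
    print(f"{width} x {height}: {pixels:,} pixels ({share:.1%} of the original)")
```

The smaller versions hold only a fraction of the original’s pixels, which is why the fine textures are the first things to go.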

Compressed Music in Real Life

Although it’s probably clear by now that my preference is always for music that hasn’t had anything removed (known as lossless music because no detail/information is lost), it’s not always practical. Understanding compression allows you to choose what suits your needs best. Here are some factors to consider when choosing your level of compression (or choosing no compression):

- How much space do you have for your music on your computer, hard drive, iPod, etc.? You’ll need to use compression if your space is limited and you want to store a large number of tracks. Here you need to weigh up quality, quantity and space. You can consider increasing storage space, decreasing the quantity of tracks or increasing the compression (and therefore decreasing the quality of the music).

- Where and how do you listen to your music? If you listen in noisy environments, at very low volume (i.e. background music only) or use low quality speakers/headphones then you might as well use slightly higher compression to maximise the quantity of tracks. The noisy environment issue can be overcome with in-ear earphones and noise cancelling earphones, but the other situations generally mean you can afford to sacrifice quality for quantity.

- How much does it matter to you? After all, you’re the one doing the listening so if you’re happy with music at 128 kbps that’s all that matters. There’s no such thing as a right or wrong level of compression – it’s entirely up to you.
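The trade-off between quality, quantity and space in the points above can be estimated with some simple arithmetic. Here’s a rough Python sketch, with the caveat that the numbers are my own assumptions for illustration: a 4-minute track, a 16 GB device, and 1411.2 kbps as the bit-rate of uncompressed CD audio (44.1 kHz, 16-bit, stereo). The function names are made up, and real files will vary a little because of tags, artwork and variable bit-rates.

```python
def track_size_mb(bitrate_kbps, seconds):
    """Approximate audio size in megabytes (1 MB = 1,000,000 bytes)."""
    bits = bitrate_kbps * 1000 * seconds
    return bits / 8 / 1_000_000


def tracks_that_fit(storage_gb, bitrate_kbps, seconds):
    """Roughly how many tracks of this length fit in the given storage."""
    return int(storage_gb * 1000 // track_size_mb(bitrate_kbps, seconds))


track_seconds = 4 * 60   # assumed track length
storage_gb = 16          # assumed device size

for label, kbps in [("128 kbps", 128), ("320 kbps", 320), ("CD audio", 1411.2)]:
    size = track_size_mb(kbps, track_seconds)
    count = tracks_that_fit(storage_gb, kbps, track_seconds)
    print(f"{label}: about {size:.1f} MB per track, roughly {count} tracks")
```

Swap in your own track length and storage size to see where your personal balance point sits.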

The best way to decide is actually quite simple. Take a well-recorded track (or two) that you really like and use your music player (iTunes, Windows Media Player, etc.) to compress it in different ways. Next, listen to the different versions on your favourite headphones and/or speakers and decide what you’re happy with. Weigh up the differences you noticed between the different levels of compression, think about how much space you have to store music and then make a decision.

Summary

Compression is a fantastic tool for portable audio and convenience, but if you have no significant space restrictions, I highly recommend sticking with lossless audio (either Apple Lossless Audio Codec – ALAC, Free Lossless Audio Codec – FLAC or Windows Media Audio 9.2 Lossless). You never know when you might upgrade your speakers or headphones and even if you can’t hear a difference now, you might be amazed at the benefits you get with that next pair of speakers or the next set of headphones! Don’t give up the magic of the music unless you absolutely have to!